“En-caching” the RAN – the AI way

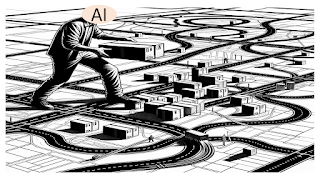

RAN caching is an intuitive use case for AI. Our report “AI and RAN – How fast will they run?” places caching third in its list of top AI applications in the RAN. There is nothing new about caching itself; in computing, caching is as old as the field. The reason caching and the RAN are now mentioned in the same breath is primarily MEC (Multi-access Edge Computing). MEC is a practical concept: it attempts to leverage the distributed nature of the RAN infrastructure in response to the explosion in mobile data generation and consumption.

Caching treats practically every point in the RAN as a possible caching destination, whether base stations, remote radio heads (RRHs), baseband units (BBUs), femtocells, macrocells or even user equipment. The caching dilemma is multipronged: what to cache, where to cache and how much to cache. In an ideal world, one would have access to infinite storage and processing capacity, interconnected with infinite throughput at zero latency. In the real world, however, each of the...